Defining what and how to learn from datasets

When running a machine learning algorithm on a dataset, there are many different ways to go about training, and many different criteria or measures by which to evaluate performance. It can be difficult to keep track of all the setups you have used, and results can easily get mixed up. To get comparable results, it is important to record how you trained an algorithm and to train other algorithms in the same way. This matters when working alone, and even more so in collaborative projects.

On PortML you can define and store how you train and evaluate algorithms on a dataset in a so-called ‘task’. The performance of every algorithm run on a task is stored with that task, so all of these runs can easily be compared. In this blog post, we will explore how to define and store tasks on PortML using the Python API.

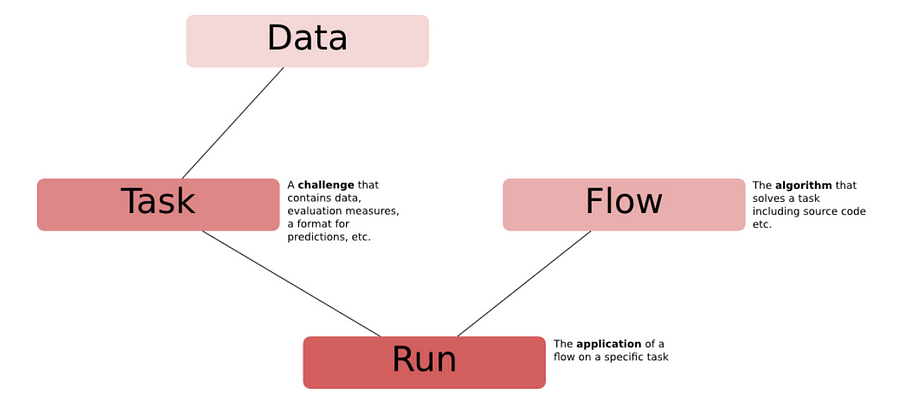

Tasks

In another blog post, we showed how to store datasets on PortML. A dataset alone, however, does not constitute an experiment: we must also agree on what types of results are expected to be shared and how they are evaluated. This is expressed in so-called ‘tasks’. A task is attached to a dataset and defines which inputs are given, which types of output are expected to be returned and which protocols should be used.

For example, one could define a classification task with a given cross-validation procedure, a target feature and predictive accuracy as the evaluation measure. Once a task is formulated on a dataset and stored on PortML, anyone on the team can retrieve it and run their algorithms under exactly the same setup, improving reproducibility and collaboration!
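To make the ingredients of such a task concrete, here is a minimal plain-Python sketch of the information a classification task pins down. The TaskSpec class and its field names are hypothetical and for illustration only (they loosely mirror the arguments passed to create_task later in this post); it is not part of the openml package.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSpec:
    """Hypothetical, illustration-only record of what a task pins down."""

    dataset_id: int            # the dataset the task is attached to
    target_name: str           # input/output: which feature must be predicted
    estimation_procedure: str  # protocol: how train/test splits are made
    evaluation_measure: str    # protocol: how predictions are scored

    def describe(self) -> str:
        return (f"Predict '{self.target_name}' on dataset {self.dataset_id} "
                f"using {self.estimation_procedure}, scored by {self.evaluation_measure}")


iris_task = TaskSpec(
    dataset_id=5,
    target_name="class",
    estimation_procedure="10-fold cross-validation",
    evaluation_measure="predictive_accuracy",
)
print(iris_task.describe())
```

Because the dataclass is frozen and compares by value, two team members who write down the same specification get equal objects. A task stored on the server gives you the same guarantee without anyone having to re-type the setup.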

Connecting to PortML

To connect to PortML, we first need to install the OpenML Python package. Open a command prompt and install openml with pip:

```shell
pip install openml
```
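Before connecting, you may want to confirm that the package is visible to the Python environment you will actually use. This small helper relies only on the standard library's importlib.metadata (Python 3.8+); the function name installed_version is our own.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str = "openml") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version()
print(f"openml {version}" if version else "openml is not installed; run: pip install openml")
```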

OpenML is an open platform built by machine learning experts for sharing datasets, algorithms and experiments, with the goal of improving collaboration and reproducibility in the machine learning community. As an open source project, OpenML is constantly improving and evolving through contributions from the community. PortML works closely with OpenML to offer OpenML's rich functionality and tools to companies and institutions in the form of a managed, secured platform.

We do not want to use the public OpenML server, but we can use its Python API (see docs) to connect to our PortML server instead. For this you will need your API key, which you can find on your profile. Connect to PortML using:

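```python
import openml

# Point the openml package at your PortML server instead of the public OpenML server
openml.config.server = "PORTML_SERVER_URL"  # replace with your PortML server URL
openml.config.apikey = "YOUR_API_KEY"       # replace with the API key from your profile
```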
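Hardcoding an API key in a script makes it easy to leak, for example by committing the script to version control. Below is a minimal sketch that reads both settings from environment variables instead. The variable names PORTML_SERVER_URL and PORTML_API_KEY and both helper functions are hypothetical; only openml.config.server and openml.config.apikey come from the snippet above.

```python
import os
from typing import Mapping, Tuple

# Hypothetical environment variable names; choose whatever your team agrees on.
REQUIRED_VARS = ("PORTML_SERVER_URL", "PORTML_API_KEY")


def load_portml_settings(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (server_url, api_key), failing loudly if either variable is missing or empty."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise KeyError(f"Missing environment variable(s): {', '.join(missing)}")
    return env["PORTML_SERVER_URL"], env["PORTML_API_KEY"]


def connect_to_portml(env: Mapping[str, str] = os.environ) -> None:
    """Configure the openml package to talk to the PortML server."""
    import openml  # imported lazily so load_portml_settings works without openml installed

    server, apikey = load_portml_settings(env)
    openml.config.server = server
    openml.config.apikey = apikey
```

Export the two variables in your shell, then call connect_to_portml() at the start of a session. Passing a plain dictionary as env makes the settings logic easy to test without touching your real environment.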

Defining a task

Alright, let’s define a task! We will use the iris dataset we uploaded in a previous blog post. We want to classify the type of iris plant, which is the obvious target in this case. More important is how we train and how we evaluate the results. Say we want to use 10-fold cross-validation and evaluate performance based on predictive accuracy. We can define this as a task object using create_task, see the code snippet below.
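To see what this estimation procedure and measure actually compute, here is a plain-Python sketch of 10-fold cross-validation scored by accuracy. It swaps in a trivial majority-class baseline as the "algorithm" so that it runs without any machine learning library, and the seeded shuffle and round-robin fold assignment are our own choices. On the server, the splits are fixed as part of the task, so every run is evaluated on the same folds.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple


def kfold_indices(n: int, k: int = 10, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Split range(n) into k (train, test) index pairs after a seeded shuffle."""
    if n < k:
        raise ValueError("need at least k instances so that no test fold is empty")
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # round-robin assignment to k folds
    splits = []
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_validated_accuracy(labels: Sequence[str], k: int = 10, seed: int = 0) -> float:
    """Mean accuracy over k folds of a majority-class baseline (a stand-in for a real model)."""
    scores = []
    for train, test in kfold_indices(len(labels), k, seed):
        # "Train": remember the most frequent label in the training part.
        majority = Counter(labels[i] for i in train).most_common(1)[0][0]
        # "Test": predict that label for every held-out instance.
        y_true = [labels[i] for i in test]
        scores.append(accuracy(y_true, [majority] * len(test)))
    return sum(scores) / len(scores)


iris_like = ["setosa"] * 50 + ["versicolor"] * 50 + ["virginica"] * 50
print(cross_validated_accuracy(iris_like))
```

On iris-shaped labels (150 instances, 50 per class) this baseline scores at most one third: the class that is most frequent in a training split is always the one least frequent in the matching test fold. A real run replaces the baseline with your own model but keeps the same folds and measure.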

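```python
import openml

# Define a supervised classification task on the iris dataset (id 5 on our PortML server)
my_task = openml.tasks.create_task(
    task_type=openml.tasks.TaskType.SUPERVISED_CLASSIFICATION,
    dataset_id=5,               # the dataset the task is attached to
    target_name="class",        # the feature to predict
    estimation_procedure_id=1,  # 10-fold cross-validation
    evaluation_measure="predictive_accuracy",
)

# Upload the task to the server configured in openml.config
my_task.publish()
```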

Notice that we have to specify the dataset id, because tasks are attached to datasets, as mentioned earlier. Similarly, the estimation procedure is referenced by its id: id 1 stands for 10-fold cross-validation. It is possible to define multiple tasks on the same dataset: datasets and tasks have a one-to-many relationship. For more details on the parameters of create_task, see the docs.
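To illustrate the one-to-many relationship, the sketch below groups a few task definitions by the dataset they are attached to. Only the first definition corresponds to the task in this post; the other two are hypothetical examples (the same iris data scored with a different measure, and a made-up dataset 7 with a made-up target). The dictionary keys simply reuse create_task's keyword names, and nothing here talks to a server.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical task definitions; keys mirror create_task's keyword arguments.
task_definitions: List[dict] = [
    {"dataset_id": 5, "target_name": "class",
     "estimation_procedure_id": 1, "evaluation_measure": "predictive_accuracy"},
    # Hypothetical: same dataset, different evaluation measure
    {"dataset_id": 5, "target_name": "class",
     "estimation_procedure_id": 1, "evaluation_measure": "area_under_roc_curve"},
    # Hypothetical: a different dataset and target
    {"dataset_id": 7, "target_name": "label",
     "estimation_procedure_id": 1, "evaluation_measure": "predictive_accuracy"},
]


def tasks_per_dataset(definitions: List[dict]) -> Dict[int, List[dict]]:
    """Group task definitions by dataset id: one dataset maps to many tasks."""
    grouped: Dict[int, List[dict]] = defaultdict(list)
    for definition in definitions:
        grouped[definition["dataset_id"]].append(definition)
    return dict(grouped)


for dataset_id, tasks in tasks_per_dataset(task_definitions).items():
    print(dataset_id, [t["evaluation_measure"] for t in tasks])
```

Each task still points at exactly one dataset, while a dataset can carry any number of tasks, which is why dataset_id is a required argument of create_task.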

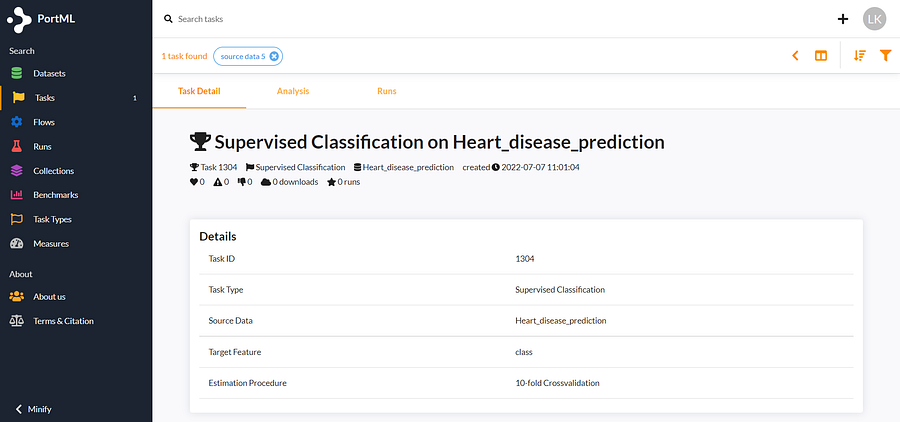

On the last line we upload our newly defined task to our secured PortML server. Now we can also view our task in the web interface:

There we go! We have defined the setup of an experiment on the iris dataset and uploaded it to PortML for our team to see. Because everyone retrieves the same task, we can be sure that we are all working on exactly the same experiment, which makes collaboration seamless.

Conclusion

Defining and storing experiments in a structured way is essential for improving reproducibility and collaboration in a machine learning workflow. We did this using OpenML’s task functionality, which let us easily store our experiment setup on our secured PortML cloud and share it with our team.

Try it out on OpenML!

As mentioned in this blog post, PortML brings the functionality and tools of OpenML to a managed, secured platform. Curious to get a feel for the platform? Go to openml.org, create an account and try it out!